OTA Performance Alert & Monitoring

A portfolio case study: designing an automated monitoring system that detects visibility failures, conversion leakage, and early-warning trends across online travel agencies.

The Problem

OTA platforms provide weekly performance metrics across visibility, engagement, and conversion, but these signals are typically reviewed in isolation and without automated thresholds. As a result, systemic failures (e.g., listings with zero appearances or sustained low conversion) are detected late, if at all. Without an integrated alerting framework that accounts for eligibility, streaks, and historical baselines, teams face both blind spots and alert fatigue. They need a scalable way to convert raw OTA metrics into prioritized, owner-aware alerts that reflect true revenue risk.

Visibility failures are binary

When a listing disappears from search results or receives zero views, the outcome is simple: guests can’t book it.

These issues usually trace back to something broken (listing mapping, availability sync, calendar, or rate plan failures) and should be treated as highest severity.

Conversion failures are fixable leakage

When demand exists (views) but bookings lag, the root cause is often correctable: pricing, fees, policies, content, or length-of-stay (LOS) restrictions.

Detecting sustained low conversion early creates high-ROI optimization opportunities.

The Solution

Explainable rules first, designed to scale into smarter detection.

Alerting

High-severity rules automatically generate tickets and route to accountable owners (Ops / Distribution / Revenue).

Monitoring

Lower-confidence signals land in a watchlist instead of generating tickets. Operators create ad-hoc tickets only after reviewing context, which limits alert fatigue.

Learning loop

Ticket outcomes feed back into the rules and scoring over time, enabling smarter prioritization and early-warning prediction.

Signal Hierarchy (Non-Technical Friendly)

Bucket signals by where the guest drops off: find → view → book → trend.

Tier 1: Visibility

Critical

- Metrics: Appearances, Views

- Meaning: Guests can’t find or open the listing

- Example rules: 0 appearances for 2 periods; 0 views for 2 periods

- Action: Auto-ticket (highest severity)
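The Tier 1 example rules above can be sketched as a trailing-streak check. This is a minimal illustration, not the production logic: the function names, the alert labels `zero_appearances` and `zero_views`, and the choice to check appearances before views are all assumptions made for the example.

```python
from typing import Optional, Sequence


def trailing_zero_streak(values: Sequence[int]) -> int:
    """Count how many of the most recent periods in a row are zero."""
    streak = 0
    for value in reversed(values):
        if value != 0:
            break
        streak += 1
    return streak


def visibility_alert(
    appearances: Sequence[int],
    views: Sequence[int],
    min_periods: int = 2,  # "for 2 periods" in the example rules
) -> Optional[str]:
    """Return a hypothetical alert label, or None if the listing is visible.

    Both sequences are ordered oldest first, one value per reporting period.
    """
    if trailing_zero_streak(appearances) >= min_periods:
        return "zero_appearances"
    if trailing_zero_streak(views) >= min_periods:
        return "zero_views"
    return None
```

Checking appearances first means a listing that is absent from search is reported once, as the upstream cause, rather than as two separate alerts.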

Tier 2: Conversion

High ROI

- Metric: CVR (bookings ÷ views)

- Meaning: Demand exists, but bookings aren’t converting

- Example rule: CVR below threshold for 3 eligible periods (a period is eligible only if it meets a minimum view count)

- Action: Route to revenue/pricing optimization
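A sketch of the Tier 2 rule with its eligibility guard might look like the following. The threshold values (`cvr_threshold=0.01`, `min_views=100`) are placeholders rather than figures from this case study, and the sketch assumes ineligible periods are skipped instead of resetting the count.

```python
from typing import Sequence, Tuple


def low_cvr_alert(
    periods: Sequence[Tuple[int, int]],
    cvr_threshold: float = 0.01,  # placeholder threshold (assumption)
    min_views: int = 100,         # placeholder eligibility floor (assumption)
    required_periods: int = 3,    # "3 eligible periods" in the example rule
) -> bool:
    """Flag a listing whose last N eligible periods all had CVR below threshold.

    `periods` holds (views, bookings) pairs ordered oldest first. A period is
    eligible only when it has at least `min_views` views, so low-traffic
    periods cannot trigger or clear the alert.
    """
    eligible_cvrs = [
        bookings / views
        for views, bookings in periods
        if views >= min_views and views > 0  # second check avoids division by zero
    ]
    recent = eligible_cvrs[-required_periods:]
    return len(recent) == required_periods and all(
        cvr < cvr_threshold for cvr in recent
    )
```

For example, `low_cvr_alert([(500, 10), (400, 2), (50, 0), (300, 1), (250, 1)])` ignores the 50-view period and evaluates the three eligible periods after the first one.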

Tier 3: Trend Signals

Monitor

- Metrics: Z-score, streaks, week-over-week declines

- Meaning: Early degradation that may become a Tier 1/2 issue

- Example: Appearances down 70% week over week (WoW); views declining for 2 consecutive weeks

- Action: Watchlist + context enrichment

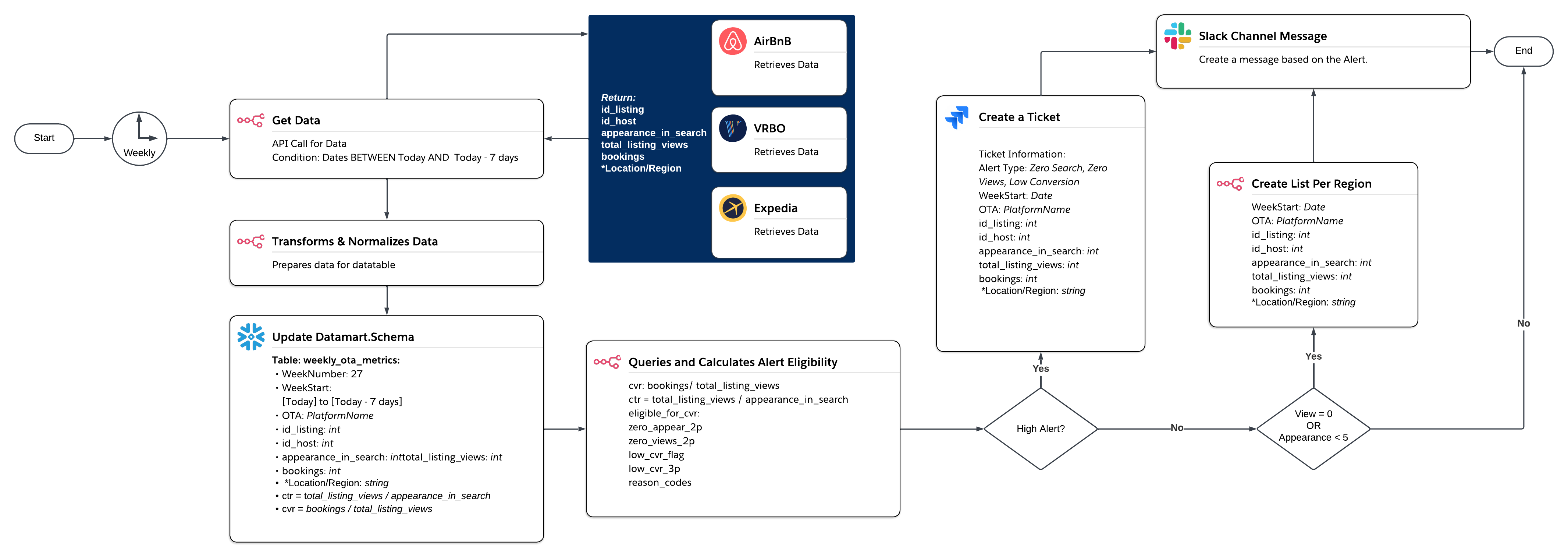

System Architecture

End-to-end workflow: ingest → warehouse → compute → ticket → communicate.

Workflow (high level)

Retrieve weekly metrics from OTAs, standardize into a common schema, compute per-listing KPIs and baselines, then create tickets + notifications for owners.

The design emphasizes low manual effort, clear accountability, and explainable alert rules.
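To make the "standardize into a common schema" step concrete, here is a minimal sketch. The source names `ota_a` and `ota_b`, their column names, and the `WeeklyMetrics` record are all hypothetical; real OTA exports will differ and may need additional cleaning.

```python
from dataclasses import dataclass
from typing import Dict, Mapping, Optional


@dataclass(frozen=True)
class WeeklyMetrics:
    """One listing's metrics for one week, in a shared shape."""
    ota: str
    listing_id: str
    week_start: str  # ISO date string, e.g. "2024-01-01"
    appearances: int
    views: int
    bookings: int

    @property
    def cvr(self) -> Optional[float]:
        """Conversion rate (bookings / views), or None when there are no views."""
        return self.bookings / self.views if self.views > 0 else None


# Hypothetical per-source column names mapped onto the common schema.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "ota_a": {
        "listing_id": "property_id",
        "week_start": "week",
        "appearances": "impressions",
        "views": "page_views",
        "bookings": "reservations",
    },
    "ota_b": {
        "listing_id": "unit",
        "week_start": "period_start",
        "appearances": "search_appearances",
        "views": "listing_views",
        "bookings": "bookings",
    },
}


def standardize(ota: str, raw: Mapping[str, object]) -> WeeklyMetrics:
    """Convert one raw export row into the common schema."""
    fields = FIELD_MAPS[ota]
    return WeeklyMetrics(
        ota=ota,
        listing_id=str(raw[fields["listing_id"]]),
        week_start=str(raw[fields["week_start"]]),
        appearances=int(raw[fields["appearances"]]),
        views=int(raw[fields["views"]]),
        bookings=int(raw[fields["bookings"]]),
    )
```

Once every source lands in the same record type, the alert rules only need to be written once.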

Error handling & guardrails

If upstream data is missing or delayed, the system degrades gracefully (hold alerts, log failures, notify ops). Minimum-data eligibility rules reduce noise (e.g., minimum views before evaluating CVR).
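The "hold alerts, log failures, notify ops" behavior could be expressed as a freshness gate that runs before any rule is evaluated. In this sketch the 10-day lag limit, the logger name, and the `FreshnessDecision` type are assumptions, and the actual ops notification is left to the caller.

```python
import logging
from dataclasses import dataclass
from datetime import date
from typing import Optional

logger = logging.getLogger("ota_monitoring")  # hypothetical logger name


@dataclass(frozen=True)
class FreshnessDecision:
    hold_alerts: bool
    notify_ops: bool
    reason: str


def check_freshness(
    latest_week_end: Optional[date],
    today: date,
    max_lag_days: int = 10,  # assumed tolerance for a weekly feed
) -> FreshnessDecision:
    """Decide whether alert rules should run on the data currently loaded."""
    if latest_week_end is None:
        logger.error("No metrics received; holding alerts")
        return FreshnessDecision(hold_alerts=True, notify_ops=True, reason="missing")
    lag_days = (today - latest_week_end).days
    if lag_days > max_lag_days:
        logger.warning("Metrics are %d days old; holding alerts", lag_days)
        return FreshnessDecision(hold_alerts=True, notify_ops=True, reason="delayed")
    return FreshnessDecision(hold_alerts=False, notify_ops=False, reason="fresh")
```

Holding alerts when data is stale avoids the worst false positive: a feed outage that looks like every listing dropping to zero appearances at once.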

Key Features

What makes this monitoring system operationally useful.

Alert-to-ticket routing

Each alert type maps cleanly to an owner and likely fix path, reducing back-and-forth and speeding time-to-resolution.
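One simple way to express this mapping is a lookup table from alert type to owner and severity. The owner names follow the teams mentioned earlier (Ops, Distribution, Revenue), but which team owns which alert type is an assumption here, as are the alert labels and the `Ticket` type.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical routing table: alert type -> (owner, severity).
ROUTES: Dict[str, Tuple[str, str]] = {
    "zero_appearances": ("Distribution", "critical"),
    "zero_views": ("Ops", "critical"),
    "low_cvr": ("Revenue", "high"),
}


@dataclass(frozen=True)
class Ticket:
    listing_id: str
    alert_type: str
    owner: str
    severity: str


def route_alert(listing_id: str, alert_type: str) -> Optional[Ticket]:
    """Create a ticket for a routable alert type.

    Returns None for alert types without a route, which the caller can
    treat as a watchlist item instead of an automatic ticket.
    """
    route = ROUTES.get(alert_type)
    if route is None:
        return None
    owner, severity = route
    return Ticket(listing_id, alert_type, owner, severity)
```

Keeping the table as data rather than branching logic means ownership changes are a one-line edit that non-engineers can review.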

Priority scoring (optional)

Alerts can be ranked using estimated impact and severity to keep teams focused on the highest NetRevPAR risk first.
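As an illustration of ranking by impact and severity, the sketch below multiplies a severity weight by an estimated weekly revenue at risk. The weights, the multiplicative formula, and the idea of a precomputed revenue estimate are all assumptions. The case study does not specify how impact is estimated.

```python
from typing import Dict, List, Tuple

# Assumed weights; tune these to match how the team values each tier.
SEVERITY_WEIGHT: Dict[str, float] = {
    "critical": 3.0,
    "high": 2.0,
    "monitor": 1.0,
}

# (listing_id, severity, estimated weekly revenue at risk)
Alert = Tuple[str, str, float]


def priority_score(severity: str, revenue_at_risk: float) -> float:
    """Combine severity and estimated impact into a single sortable score."""
    return SEVERITY_WEIGHT.get(severity, 1.0) * revenue_at_risk


def rank_alerts(alerts: List[Alert]) -> List[Alert]:
    """Return alerts ordered from highest to lowest priority score."""
    return sorted(
        alerts,
        key=lambda alert: priority_score(alert[1], alert[2]),
        reverse=True,
    )
```

With these weights, a high-severity alert on a large listing can outrank a critical alert on a small one, which is the intended effect of scoring on impact as well as severity.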

Agent-ready foundation

Resolution outcomes become training data for an early-warning layer that predicts risk before a listing goes fully dark.

Roadmap

How this evolves from rules → richer signals → predictive monitoring.

Phase 1: Rules + ticketing

Launch explainable alerts (visibility + low conversion with eligibility thresholds). Implement ownership, SLAs by alert type, and a consistent communication path.

Phase 2: Enrichment

Add supporting signals like pricing changes, fees/policies, LOS restrictions, and availability patterns to improve diagnosis and reduce false positives.

Phase 3: Early-warning agent

A lightweight agent reads historical alerts + resolutions, detects precursors to visibility failures, and produces risk scores. Human approval remains the final gate.

Phase 4: QA + calibration

Periodic threshold tuning, precision/recall tracking, and feedback loops ensure the system remains reliable as seasonality and portfolio mix change.

Get in Touch

Interested in building automation-first monitoring systems?

Email Sarah at sarah.brown@naturallylogical.com

Note: This portfolio page intentionally omits company-specific identifiers and operational details.